(Originally published as a LinkedIn article 10th February 2021)

Within Atos I lead our AWS Coaching Hub, an AWS-focussed community interested in training, certification and working with AWS technologies alongside our customers.

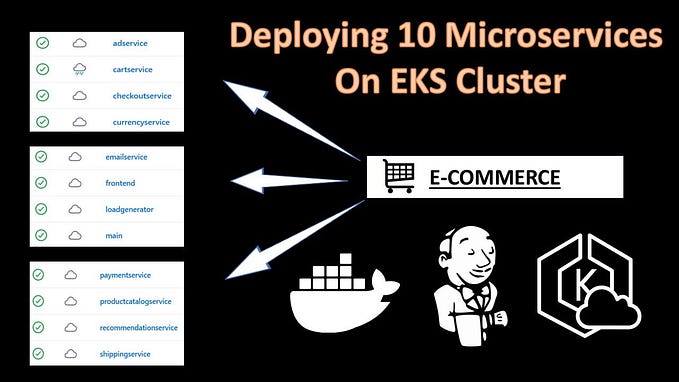

I’m keen that this community doesn’t treat certification as the end of the journey; it’s merely the beginning. Within an organisation like Atos, staff who have only completed some courses and certifications can find it difficult to feel confident starting work on customer projects. As well as members supporting each other on assignments, I’ve used the AWS Coaching Hub to arrange hands-on training where people can go in depth on AWS services. As part of this we’ve just run our second AWS GameDay, this time focusing on microservices as we build on our knowledge and use of foundational services such as EC2, EBS and VPC.

In our scenario, teams had to pick up an existing environment from disgruntled employees who had left the day before. The solution was an API-driven, two-sided market: teams had to publish applications via an API for other teams to consume, and consume other teams’ APIs as part of their own applications, all while delivering an application to end customers.

Numerous AWS-native services were used, going well beyond the theory in training material: API Gateway, Lambda, Fargate, Elastic Beanstalk, Auto Scaling and DynamoDB were all in the mix, running a number of Python-based applications. Once services were running, teams earned points for successfully serving customers, for consuming other teams’ microservices, and for other teams successfully using their own microservices. Things then got rather interesting as the disgruntled employees who had left the day before injected chaos into the environment.

Monitor, monitor, monitor, and then monitor some more…

At this point the game pivoted from the initial deployment of the native services to keeping the lights on and reacting to a number of external factors. These included hacking events that damaged our shiny new solutions and variable user activity that needed to be accommodated. All the while we kept an eye on the microservices published by other teams to see which were the most reliable, had the lowest latency and so on. AWS X-Ray was a service I’d not previously used, but alongside the usual services like CloudWatch it was fantastic for viewing service health and identifying trouble spots where requests were failing or latency was increasing.

Overall, everyone enjoyed a day of getting more hands-on with the services as they further upskilled in microservices. It’s great personal development for the individuals, and part of our ongoing investment in upskilling our staff and giving capable people the hands-on experience to deliver successfully for our customers.

If you’d like to discuss the sort of things we do within the Atos AWS Coaching Hub, feel free to get in touch via LinkedIn. Thank you to all our AWS colleagues for running the event, and to our Atos colleagues for participating and making it such a success!